In the previous post we were wrapping our backend server into a lambda function. It is working just fine, but without a persistent storage layer there is no much worth to it; and the in-memory solution we have right now is everything but ideal for a serverless architecture.

The store interface we work with for the storage layer is a rather simple one:

- there is a Load method, which returns the

Sessionobject from the database if it’s present, otherwise returns an error - and a Save, that inserts a

Sessionwith given ID

Couldn’t get any simpler, right?

The in-memory implementation uses a simple map for storing and retrieving the data and it’s prefect for the job; so let’s give a try for AWS’s flagship, key-value database, the DynamoDB - which, if you strip away all the fancy features, is just a simple map after all…

In this post we take a look on how optimistic locking is different from the

usual, pre-locking approach and prepare the store interface for optimistic

locking. In the next post we’ll have the DynamoDB implementation of this

store that is using optimistic locking.

This item is the fifth one on a series called Pajthy to AWS where I try to capture the process of migrating one of my open-source pet projects into a serverless setup in AWS.

Dealing with race conditions

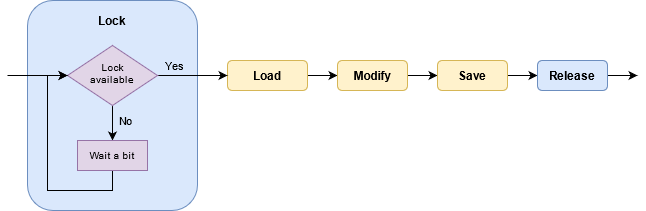

The current server implementation uses the classic locking solution: the

handler puts a lock on the referenced session before reading the data from

the store and releases that lock after the write is done; if another request

tries to work on the same session it won’t be able while the lock is on, it

has to wait until the current thread releases it. Far from elegant, but it’s a

really simple solution:

Before doing the read-modify-write cycle we want to make sure that there are no concurrent changes to our data. For achieving this the thread is waiting for the lock of the session to be free; once it is, the RMW cycle gets executed and the lock released back to the next thread.

Bad news: the locks we need to do this with DynamoDB are not first class citizens. So now we are at a decision point: either find a shared lock implementation for DynamoDB or change the core behavior to handle race conditions in a more fitting way.

Since the purpose of this series would be to migrate the existing code into

serverless, the former would be the automatic choice; however using a shared

locking solution for DynamoDB (either by implementing or using an existing one)

would at least double the number of our database operations: for every get

and put in the sessions table (loading a session and updating later) there

would be at least one of each in the locks table as well (acquiring and

releasing that lock). Twice as many DynamoDB request means twice the money for

running the service later: with dynamo we pay after the number of read and write

operations 💰. So instead of throwing money out of the window, let’s

change the core instead!

Optimistic locking

Optimistic locking is one of the simplest algorithms that can deal with concurrent write operations, and thus it’s usage is widespread among NoSQL developers; it’s ideal when the data updates are less frequent. Luckily pajthy falls under this category since the most write-intensive phase is when a vote is happening; even during that it’s rarely goes above a single write per second.

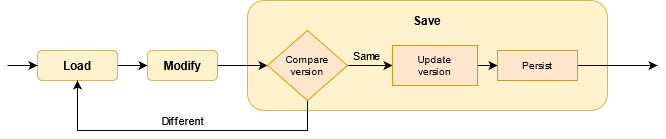

The most important part of this strategy is the version field we introduce to our data: we only update the database if the version value in the database equals to the one we originally read from the database. If the version differs it means the data got updated by another thread, so for data consistency’s sake we should repeat the whole read-modify-write cycle.

After reading and modifying our data the Save method will update the session

with the updated data and version if the version in the database matches to

the version it had in the data during the read. In case the two versions do

match, Save proceeds; otherwise it restarts the cycle with a fresh read (to

get the most recent version from the database).

The only requirement towards the database is to support conditional write operations - and we are in luck again, since conditional writes are supported by DynamoDB and we can work it into our local map store as well.

Changing the core code

Let’s start at the beginning and change the interface first:

| |

Here at line 19 we add version as a new parameter for the Save method.

I choose to use the variadic approach here: since golang does not have optional

parameters for functions, this is the nicest way to “emulate” them in my

opinion, since there are cases when we don’t have a version (like when saving a

session the first time) and other times we have. The go-recommended way in

these cases is to have two methods (in our case this could be a Create and an

Update).

After that in line 22-25 the store.Session is defined: it consist of the

domain.Session and a version. Our version will be a randomly generated UUID.

There is an “invisible” spec on Store too: from now on Save could return

ErrVersionMismatch if the supplied version does not match the one in the

store.

To standardize the read-modify-write cycle there is a wrapper method here:

| |

As you can see the ReadModifyWrite method has three parameters: the id of

the session in our focus, a Store implementation on which we can call the

Load and Save methods, and a function called modify: it has the freshly

loaded Session from the store in its parameters and returns the modified one

that should be saved back.

The method does exactly what it’s name suggest: reads the data, applies the

changes through the modify parameter function then tries to write the modified

session back to the store. The trick is at line 47: in case the Save

method returned ErrVersionMismatch we wait 20ms and start the cycle over again

from the top. There is a failsafe in line 35 that won’t let this cycle go

on forever: after 5 retries the method returns with the same

ErrVersionMismatch error and let’s the caller to deal with the issue.

Here is an example for using the ReadModifyWrite():

| |

The business logic when a user wants to join a voting session is encapsulated in

the anonymous modify function between lines 121-128; if there is no user

with the requested name in the session then add it and return the modified s

object.

In the switch starting at line 131 we deal with the outcome of the

ReadModifyWrite method. This piece of handler for example returns an 500

Internal Server Error when the error was ErrVersionMismatch.

And if you were wondering, the InMemory implementation for the Store#Save

looks like this:

| |

Now, after we took one step back and two forward, there is nothing between us

and working on what I promised in the intro: having a DynamoDB backed Store.

Unfortunately this post got pretty wordy already even without that 🙄.

Stay tuned!